Goal and Background

The goal of this lab assignment was to gain experience measuring and interpreting the spectral reflectance of various Earth surface features, and to perform basic Earth resource monitoring using remote sensing band ratio techniques.

Methods

Part 1 - Spectral Signature Analysis

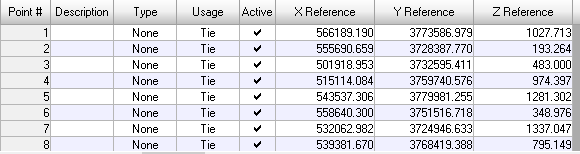

For Part 1 of this lab, the task was to measure the spectral reflectance of 12 different surface features in the image provided by the professor: standing water, moving water, deciduous forest, evergreen forest, riparian vegetation, crops, dry soil, moist soil, rocks, asphalt highway, airport runway, and concrete surface. For each feature, I first used the Drawing > Polygon tool to draw a polygon over the area whose reflectance I wanted to view. With the polygon drawn, I then opened the Raster > Supervised > Signature Editor tool. Using this tool, I was able to view the reflectance of all 12 features as well as a spectral plot of each feature's signature.
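Conceptually, a spectral signature for a polygon is a per-band summary (typically the mean) of the pixel values that fall inside it. The sketch below illustrates that idea outside of ERDAS Imagine. It assumes the image is a NumPy array shaped (bands, rows, cols) and that polygon vertices are given in (column, row) pixel coordinates; the helper names `polygon_mask` and `spectral_signature` are hypothetical and do not correspond to any ERDAS function.

```python
import numpy as np


def polygon_mask(shape, vertices):
    """Return a boolean mask of pixels whose centers lie inside the polygon.

    Uses the even-odd (ray casting) rule. `vertices` is a list of (x, y)
    pairs in pixel coordinates, with x = column and y = row (an assumption).
    """
    rows, cols = shape
    yy, xx = np.mgrid[0:rows, 0:cols]
    x = xx + 0.5  # pixel-center x coordinates
    y = yy + 0.5  # pixel-center y coordinates
    inside = np.zeros(shape, dtype=bool)
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        # The edge crosses the horizontal line through the pixel center.
        crosses = (y1 > y) != (y2 > y)
        # x position where the edge meets that horizontal line
        # (horizontal edges give inf/nan here but never "cross").
        with np.errstate(divide="ignore", invalid="ignore"):
            x_int = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
        # Toggle pixels whose rightward ray crosses this edge.
        inside ^= crosses & (x < x_int)
    return inside


def spectral_signature(image, mask):
    """Mean value of each band over the masked pixels.

    `image` has shape (bands, rows, cols); the result has shape (bands,).
    """
    return image[:, mask].mean(axis=1)


# Usage with a synthetic 3-band image whose bands are constant.
img = np.stack([np.full((6, 6), v) for v in (10.0, 20.0, 30.0)])
square = [(0, 0), (4, 0), (4, 4), (0, 4)]
mask = polygon_mask((6, 6), square)
print(mask.sum())                      # 16 pixels inside the 4x4 square
print(spectral_signature(img, mask))   # [10. 20. 30.]
```

Plotting the returned per-band means against band number (or band center wavelength) gives a curve comparable in spirit to the Signature Editor's spectral plot, though the exact statistics ERDAS reports may differ.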

Part 2 - Resource Monitoring

For the second part of this lab, the task was to use band ratio techniques to monitor the health of vegetation and the iron content of soil. To assess vegetation health, I first ran the Raster > Unsupervised > NDVI tool with the required inputs. I then opened the output image in ArcMap and classified it into five distinct classes to produce a clearer, more readable map. To measure soil iron content, I used the Raster > Unsupervised > Indices tool with the 'Ferrous Minerals' function selected. After running the tool, I again opened the output image in ArcMap and classified it into five distinct classes to produce a better-looking map.
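Both tools compute simple band arithmetic. NDVI is the normalized difference (NIR − Red) / (NIR + Red), and ERDAS documents its 'Ferrous Minerals' index as the Landsat TM band 5 / band 4 ratio, i.e. mid-infrared (SWIR) over NIR. The sketch below reproduces that arithmetic with NumPy and then bins the result into five classes. The band-to-array mapping is an assumption that depends on the sensor, and the equal-interval binning is only one option: ArcMap offers several classification methods (its default is Natural Breaks), so this is not necessarily how the maps in this lab were classified. All function names are hypothetical.

```python
import numpy as np


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red).

    Pixels where NIR + Red == 0 are set to 0 to avoid division by zero.
    """
    nir = nir.astype(float)
    red = red.astype(float)
    denom = nir + red
    out = np.zeros_like(denom)
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out


def ferrous_minerals(swir, nir):
    """Ferrous minerals ratio, assumed here to be SWIR / NIR (TM 5 / TM 4).

    Pixels where NIR == 0 are set to 0.
    """
    swir = swir.astype(float)
    nir = nir.astype(float)
    out = np.zeros_like(swir)
    np.divide(swir, nir, out=out, where=nir != 0)
    return out


def five_classes(index):
    """Assign class labels 1..5 using equal-interval breaks (an assumption)."""
    edges = np.linspace(index.min(), index.max(), 6)
    # Only the 4 interior edges are used, so labels run from 1 to 5.
    return np.digitize(index, edges[1:-1]) + 1


# Usage with small synthetic reflectance arrays.
nir = np.array([[0.5, 0.4], [0.0, 0.3]])
red = np.array([[0.1, 0.4], [0.0, 0.1]])
swir = np.array([[0.6, 0.2], [0.1, 0.3]])
print(ndvi(nir, red))
print(ferrous_minerals(swir, nir))
print(five_classes(np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])))  # [1 2 3 4 5 5]
```

Higher NDVI values generally indicate denser, healthier vegetation, while higher ferrous-minerals ratios suggest more iron-bearing surface material; classifying either surface into a few bins simply makes those gradients easier to read on a map.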

Results

Spectral Reflectance of Standing Water

Spectral Reflectance of Dry vs Moist Soil

Spectral Reflectance of All Features Tested

Sources

Satellite image is from the Earth Resources Observation and Science (EROS) Center, United States Geological Survey.