Goal and Background

Lab 4 in GEOG 338 Remote Sensing had us explore and become comfortable with many of the miscellaneous image functions that are built into the Erdas Imagine software. The image functions used in this lab include image subsetting with an Inquire Box and with an AOI file, image fusion using the Resolution Merge tool, radiometric enhancement using the Haze Reduction tool, linking the Erdas Imagine viewer to Google Earth, changing image resolution using the Resample Pixel Size tool, image mosaicing using both the Mosaic Express tool and the MosaicPro tool, and image differencing using the Model Maker to create an image showing change from one year to another.

Methods

Part 1

To create image subsets, the first step is to open the provided image in the Erdas Imagine viewer. Next, using the Raster toolbar, create an Inquire Box on the image and position it where necessary. Then, from the Raster toolbar, click the Subset & Chip button, choose the Create Subset Image tool, and create and save the image from the Inquire Box. To create a subset image from an AOI file, you must load both the image and a shapefile of the desired area into the Erdas Imagine viewer. Then highlight the shapefile in the viewer, click the Paste From Selected Layer button on the Home tab, and use the Subset & Chip button as before to create the image.
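The two subsetting approaches can be illustrated outside of Erdas with a small NumPy sketch. This is not what Erdas Imagine runs internally; it only shows the idea of cutting a rectangular box versus clipping to an irregular area. The function names `subset_by_box` and `subset_by_mask` are hypothetical, and the AOI is assumed to be already rasterized into a boolean mask.

```python
import numpy as np

def subset_by_box(image, row_min, row_max, col_min, col_max):
    """Return the pixels inside an inclusive row/column box (Inquire Box idea)."""
    return image[row_min:row_max + 1, col_min:col_max + 1].copy()

def subset_by_mask(image, mask, fill=0):
    """Keep pixels where mask is True, fill the rest, and crop to the
    bounding box of the mask (AOI idea, mask assumed pre-rasterized)."""
    rows = np.where(np.any(mask, axis=1))[0]
    cols = np.where(np.any(mask, axis=0))[0]
    clipped = np.where(mask, image, fill)
    return clipped[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

# Toy single-band image with pixel values 0..35
image = np.arange(36).reshape(6, 6)

box = subset_by_box(image, 1, 3, 2, 4)

# Toy irregular AOI: a 3x3 block with one corner cut off
mask = np.zeros((6, 6), dtype=bool)
mask[2:5, 1:4] = True
mask[2, 1] = False
aoi = subset_by_mask(image, mask)
```

The box subset is always rectangular, while the AOI subset keeps the rectangular extent of the shape but fills pixels outside the shape with a background value.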

Part 2

To merge two images and increase the spatial resolution of an image, first open the Raster tab in Erdas, click the Pan Sharpen drop-down, and select the Resolution Merge tool. Select the images you wish to fuse together and choose which resampling technique to use; in this case Nearest Neighbor was used.
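As a rough illustration of what a resolution merge does, the sketch below upsamples a coarse multispectral band with nearest neighbor and multiplies it by a finer panchromatic band. The multiplicative rule is an assumption chosen for simplicity, since the lab text does not state which merge method was selected, and the names `upsample_nearest` and `multiplicative_merge` are hypothetical.

```python
import numpy as np

def upsample_nearest(band, factor):
    """Repeat each pixel factor x factor times (nearest neighbor upsampling)."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)

def multiplicative_merge(multispectral, pan, factor):
    """Fuse a (bands, rows, cols) stack with a pan band of shape
    (rows * factor, cols * factor) by per-pixel multiplication.
    Assumed method for illustration only."""
    fused = [upsample_nearest(band, factor).astype(np.float64) * pan
             for band in multispectral]
    return np.stack(fused)

# Toy data: one 2x2 multispectral band and a 4x4 panchromatic band
ms = np.array([[[1, 2], [3, 4]]])
pan = np.full((4, 4), 2.0)

fused = multiplicative_merge(ms, pan, factor=2)
```

The output has the pixel grid of the panchromatic band while keeping one layer per multispectral band.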

Part 3

To improve image spectral and radiometric quality, haze reduction can be used. To do this, go to the Raster toolbar, click on the Radiometric drop-down menu, and select the Haze Reduction tool. Select the image you wish to run the tool on, then allow it to run. The output image should show noticeably less haze than the input.

Part 4

To link your image in the Erdas Imagine viewer to Google Earth, first open the desired image in Erdas and then click on the Help button on the Home tab. In the search bar, type in 'Google' and click search. Once the results appear, click on the Connect to Google Earth button, which will launch Google Earth, and then click on both the Match GE to View and the Sync GE to View buttons to fully sync the two views.

Part 5

To resample an image and change its pixel size, open the Raster toolbar and click on the Spatial drop-down menu. Next, select the Resample Pixel Size tool and select the image you wish to resample. Use the default Nearest Neighbor resample method or the Bilinear Interpolation method, set the pixel dimension to the desired size, and then click the Square Cells box.
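The nearest neighbor option can be sketched in a few lines of NumPy: each output pixel simply takes the value of the input pixel whose footprint contains the output pixel's center. This is a minimal sketch, not the Erdas implementation; `resample_nearest` and its parameters are hypothetical names, and square cells are assumed.

```python
import numpy as np

def resample_nearest(image, old_size, new_size):
    """Resample a 2-D image from old_size to new_size pixels (same units)
    using nearest neighbor. Square cells are assumed."""
    rows, cols = image.shape
    new_rows = int(round(rows * old_size / new_size))
    new_cols = int(round(cols * old_size / new_size))
    # Center of each output pixel, expressed in input pixel coordinates
    scale = new_size / old_size
    r_idx = np.floor((np.arange(new_rows) + 0.5) * scale).astype(int)
    c_idx = np.floor((np.arange(new_cols) + 0.5) * scale).astype(int)
    r_idx = np.clip(r_idx, 0, rows - 1)
    c_idx = np.clip(c_idx, 0, cols - 1)
    return image[np.ix_(r_idx, c_idx)]

# Toy example: go from 30 m pixels to 15 m pixels
image = np.array([[1, 2],
                  [3, 4]])
fine = resample_nearest(image, old_size=30.0, new_size=15.0)
```

Nearest neighbor keeps the original brightness values and only duplicates them, which is why the result looks blocky compared with Bilinear Interpolation, which averages neighboring values.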

Part 6

To mosaic images using the Mosaic Express tool, first open the Raster toolbar, click on the Mosaic drop-down menu, and select the Mosaic Express tool. Select which images to add, making sure to put them in the correct load order, and then run the tool to produce the mosaiced output image. To do this in the MosaicPro tool, click on the same Mosaic drop-down menu but select the MosaicPro option instead. Bring in both images, making sure to select the Compute Active Area option under the Image Area Options tab, and insert both images into the MosaicPro window. Next, make sure that the images are loaded in the correct order with the proper one on the bottom. Then run Color Corrections using Histogram Matching and use Overlay as the overlap function. Finally, process the mosaic to create the output image.
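The Histogram Matching color correction can be illustrated with a generic CDF-matching sketch: the brightness values of one image are remapped so that its cumulative histogram follows that of the other image. This is a textbook version written for illustration, not MosaicPro's actual code, and `match_histogram` is a hypothetical name.

```python
import numpy as np

def match_histogram(source, reference):
    """Remap source values so their cumulative distribution follows
    the cumulative distribution of reference."""
    src_values, src_idx, src_counts = np.unique(
        source.ravel(), return_inverse=True, return_counts=True)
    ref_values, ref_counts = np.unique(reference.ravel(), return_counts=True)

    # Cumulative distribution functions scaled to the range (0, 1]
    src_cdf = np.cumsum(src_counts) / source.size
    ref_cdf = np.cumsum(ref_counts) / reference.size

    # For each source value, find the reference value at the same quantile
    mapped = np.interp(src_cdf, ref_cdf, ref_values)
    return mapped[src_idx].reshape(source.shape)

# Toy example: the second scene is uniformly brighter than the first
source = np.array([[10, 20], [30, 40]])
reference = np.array([[110, 120], [130, 140]])
matched = match_histogram(source, reference)
```

Matching the histograms before mosaicing reduces the visible seam where the two scenes overlap, which is the main visual difference between the Mosaic Express and MosaicPro outputs.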

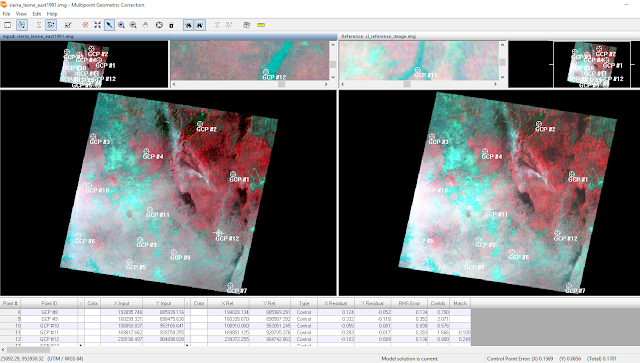

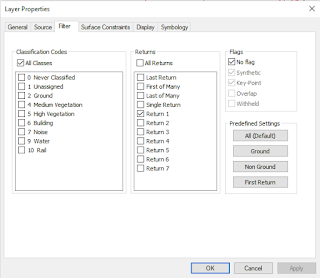

Part 7

To create an image that shows which areas changed between the dates of two images, first open the Model Maker tool under the Toolbox toolbar. Once the Model Maker tool is running, insert two raster objects, a function object, and an output object, and connect them all. In the raster objects, insert the two images you wish to compare. In the function object, subtract the values of one image from the other and then add a constant, in this case 127, to get the output. Then, in a second model, take the output from the first model and run an EITHER IF OR function using the change/no change threshold calculated from the image metadata to find out which areas experienced change and which did not. Finally, open the output image of this second model in ArcMap and overlay it on the original image of the study area to create a map showing areas of change.
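The two models described above can be sketched with NumPy as a difference step followed by a threshold step. The offset of 127 comes from the lab; the threshold rule used here, mean plus or minus 1.5 standard deviations of the difference image, is an assumption for illustration, since the text only says the threshold was calculated from the image metadata. The function names are hypothetical.

```python
import numpy as np

def difference_image(image_a, image_b, offset=127):
    """Subtract image_b from image_a and add a constant so that
    'no change' sits near the offset instead of at zero."""
    return image_a.astype(np.int32) - image_b.astype(np.int32) + offset

def change_mask(diff, k=1.5):
    """Flag pixels outside mean +/- k * std as change.
    k = 1.5 is an assumed value, not taken from the lab text."""
    mean, std = diff.mean(), diff.std()
    lower, upper = mean - k * std, mean + k * std
    changed = (diff < lower) | (diff > upper)
    return changed, (lower, upper)

# Toy example: identical scenes except for one pixel that brightened
earlier = np.full((4, 4), 100, dtype=np.uint8)
later = earlier.copy()
later[0, 0] = 200

diff = difference_image(later, earlier)
changed, bounds = change_mask(diff)
```

Casting to a signed integer type before subtracting matters: subtracting 8-bit unsigned arrays directly would wrap around for negative differences. The boolean `changed` array plays the role of the second model's output, which is then overlaid on the study area.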

Results

image subset from Inquire Box

image subset from AOI (area of interest file)

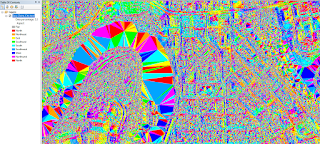

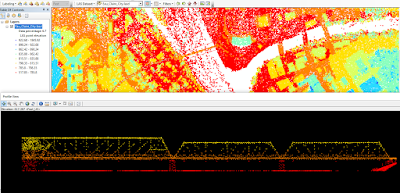

image mosaic using Mosaic Express

image mosaic using MosaicPro

histogram of change image with upper and lower bounds

map showing areas of change from 1991-2011

Sources

Satellite images are from the Earth Resources Observation and Science Center, United States Geological Survey. Shapefile is from the Mastering ArcGIS 6th edition dataset by Maribeth Price, McGraw Hill, 2014.